WHY I'M OBSESSED WITH TREE-TECH INNOVATION

A Personal Research Journal on Human-Computer-Tree Interaction

Hanju Seo

As recently as a dozen years ago, I didn’t see myself as part of the sustainability crowd. I used to be the kind of person captivated by the romance of robotics, thrilled by the idea that a line of code could nudge a pixel into place. Passing paddies and cattle sheds felt routine rather than ecological. But after years in a city where towers and traffic replaced hills, fields, and cattle, I began to understand what I had left behind.

That shift led me to a question: How can living landscapes speak through systems once built ONLY for machines?

1. Homaesil (호매실)

Homaesil (호매실) is a planned apartment district on the southwestern edge of Suwon (수원), South Korea, bordered on one side by Mount Chilbo (칠보산)[¹] and on the other by former rice paddies. Construction began in the late 1990s, and by the early 2000s twenty-storey towers stood beside vegetable plots that had not yet been rezoned. Residents could reach metropolitan Seoul in an hour, yet cattle sheds and small barns remained part of the immediate streetscape.

My family moved in before the access roads were fully paved, and I stayed through childhood, adolescence and early adulthood. Daily life combined rural and metropolitan signals: tractors started work at dawn, while broadband and CRT monitors arriving in local homes brought the visual culture of the “dot-com” era into the same years.

View of Homaesil’s apartment blocks from the rocky ridge of Mount Chilbo; I lived in Homaesil for over 25 years.

After school I often visited the local PC-bang (PC방)[²], an internet café that rented computers by the hour. The walk from our apartment to the café followed the same sequence every day: first a narrow footpath alongside a pond, then a short stretch shaded by persimmon trees, and finally a passage through a cattle shed where Korean native bulls were kept overnight. The shed carried the smell of ammonia and fermenting feed, while the café thirty metres beyond added the smell of cup noodles.

Landscape in Homaesil

Left: a school rice-planting lesson in the paddies; right: after school at a neighbourhood PC-bang in the 2000s.

Encountering everyday agriculture and networked computing in rapid succession made it clear to me that rural and digital systems share the same physical space instead of occupying separate domains. That realisation—born from the contrasts in my own environment—later shaped my design practice: technology must acknowledge and collaborate with the living contexts in which it functions rather than try to replace them.

2. Family Forest

According to the Korea Forest Service, more than 60 percent of South Korea is forested, and roughly two-thirds of that forest is privately owned (the KFS reports 66.1 percent as of 2020). National cadastral records list about 2.2 million individual owners.

Among them, my mother and relatives jointly hold title to what locals call a Seon-san (선산), a micro-forest on a narrow hillside in the south, topped with native pine over a secondary layer of mixed hardwoods. The parcel is commercially marginal: too small for an industrial operator yet still large enough to require periodic track repairs, storm-damage inspections and selective thinning.

Satellite snapshot of my mother’s privately owned forest in southern Korea—still the only monitoring record the family can access.

Most co-owners reside in cities and visit the site infrequently. No formal management plan exists; responsibility circulates informally when a local authority issues a request or when a severe weather event is reported in regional news. Record-keeping consists of ad-hoc photographs and brief messages shared among family members. Decisions about debris removal, pruning or access remain unresolved for months when schedules do not align.

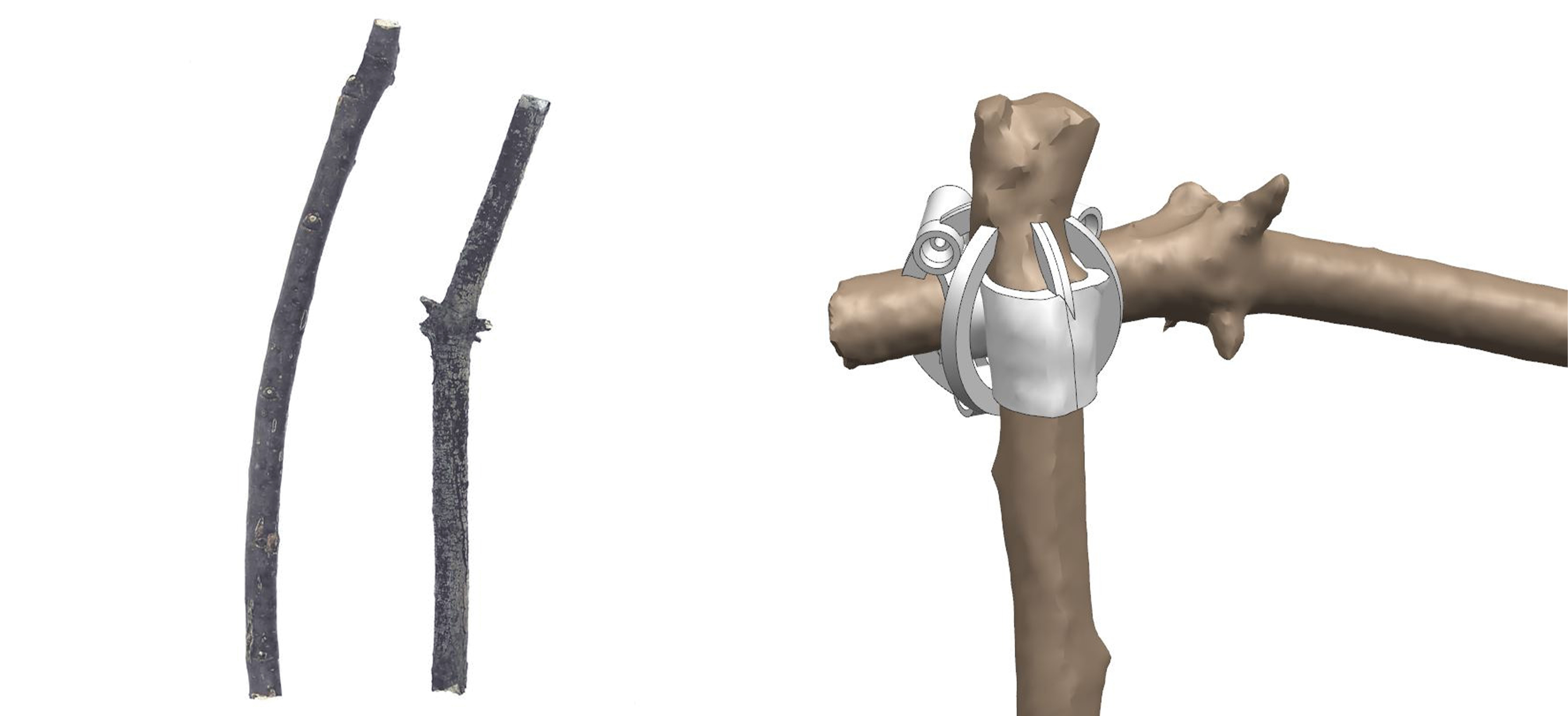

During visits home, I noticed how many branches municipal crews left on pavements after routine pruning. The material, classified by the city as green waste, was diverse in species and irregular in form. I began collecting representative samples to test whether non-standard biological shapes could be captured digitally and reintegrated into precise assemblies without prior milling. The exercise was technical rather than artistic: measure the accuracy of handheld scanning, evaluate tolerance stacking in custom connectors, and determine repeatability across a range of diameters and curvatures.

The experiment did not yet address species characteristics or long-term durability, but it confirmed that irregular organic geometry could enter a digital workflow without first being normalised into rectangular stock. It also provided a practical example of how family-owned forest residues might gain supplementary value if integrated into small-scale fabrication systems. The lack of structured management in my family’s stand therefore served both as a constraint and as an initial case study for exploring tree-centred data acquisition and utilisation.
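Tolerance stacking, one of the checks listed above, is easiest to see as arithmetic. The Python sketch below compares a worst-case stack with a root-sum-square (RSS) estimate for a single branch-connector joint. The three contributors and their values are hypothetical placeholders chosen for illustration, not measurements from the experiment.

```python
import math


def worst_case_stack(tolerances_mm):
    """Worst case: every contributor sits at its limit in the same direction."""
    return sum(abs(t) for t in tolerances_mm)


def rss_stack(tolerances_mm):
    """Root-sum-square: assumes independent, roughly normal contributors."""
    return math.sqrt(sum(t * t for t in tolerances_mm))


# Hypothetical +/- contributions (mm) for one branch-connector joint.
contributors = {
    "handheld scan error": 0.5,
    "FDM print deviation": 0.3,
    "bark swelling with moisture": 0.4,
}
tols = list(contributors.values())
print(f"worst case: +/-{worst_case_stack(tols):.2f} mm")  # +/-1.20 mm
print(f"RSS:        +/-{rss_stack(tols):.2f} mm")         # +/-0.71 mm
```

The worst-case figure is the safe bound for a fit that must never bind. The RSS figure assumes the errors are independent and is closer to what a batch of joints would typically show.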

3. Digital Gleaning

Collected street-side branches beside the 3-D-printed connectors that emerged from the Digital Gleaning workflow.

Using a handheld 3-D scanner, I produced point-cloud models of individual branches, retaining surface detail down to millimetre scale. In CAD software I then generated complementary geometries: custom connectors whose internal cavities mirrored the scanned contours. Printed in polymer on an FDM machine, each connector could slide onto its corresponding branch with a friction fit and allow that branch to join another branch or an everyday manufactured object. The four-step workflow (scanning, modelling, printing and assembly) demonstrated that irregular natural discards could participate in digitally driven fabrication without first being milled or standardised.

The workflow in one frame: the branch is digitised, its bespoke scaffold is modelled and 3-D-printed, then the finished part slides back onto the wood for a precise, tool-free fit.

Two branches joined vertically by a non-invasive scaffold connector.
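The modelling step can be sketched one cross-section at a time. The Python example below assumes each scanned branch slice has been resampled into radii at equal angles around its centre. This is a simplification, since the actual workflow used CAD software on full point clouds. It shrinks the cavity by a small interference so the printed part grips the bark, then adds a constant wall. The function name `connector_slice` and the 0.2 mm interference and 2.0 mm wall values are hypothetical.

```python
import math


def polar_to_xy(radii_mm):
    """Convert equally spaced polar samples into (x, y) points."""
    n = len(radii_mm)
    return [(r * math.cos(2 * math.pi * i / n), r * math.sin(2 * math.pi * i / n))
            for i, r in enumerate(radii_mm)]


def connector_slice(scan_radii_mm, interference_mm=0.2, wall_mm=2.0):
    """Offset one scanned cross-section into a hypothetical connector slice.

    The cavity is shrunk by `interference_mm` so the part grips the bark by
    friction; the outer wall adds a constant `wall_mm` beyond the cavity.
    Both values are illustrative assumptions.
    """
    cavity = [r - interference_mm for r in scan_radii_mm]
    outer = [r + wall_mm for r in cavity]
    return polar_to_xy(cavity), polar_to_xy(outer)


# A hypothetical, slightly oval branch section sampled every 45 degrees (mm).
section = [12.0, 11.6, 11.1, 11.5, 12.2, 11.8, 11.0, 11.4]
cavity_pts, outer_pts = connector_slice(section)
print(round(cavity_pts[0][0], 2), round(outer_pts[0][0], 2))  # 11.8 13.8
```

Offsetting along radial rays only approximates a true surface offset. On strongly concave sections, a CAD offset command would follow the contour more faithfully.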

I described this method as Digital Gleaning, borrowing the agricultural term gleaning [³], which refers to collecting residual crops left in a field after the main harvest. In this context the “residuals” were fallen or pruned natural items that would otherwise move directly to disposal or compost. Digital Gleaning highlights three points:

Presenting the Digital Gleaning concept on stage at Design Korea 2021, Seoul, South Korea

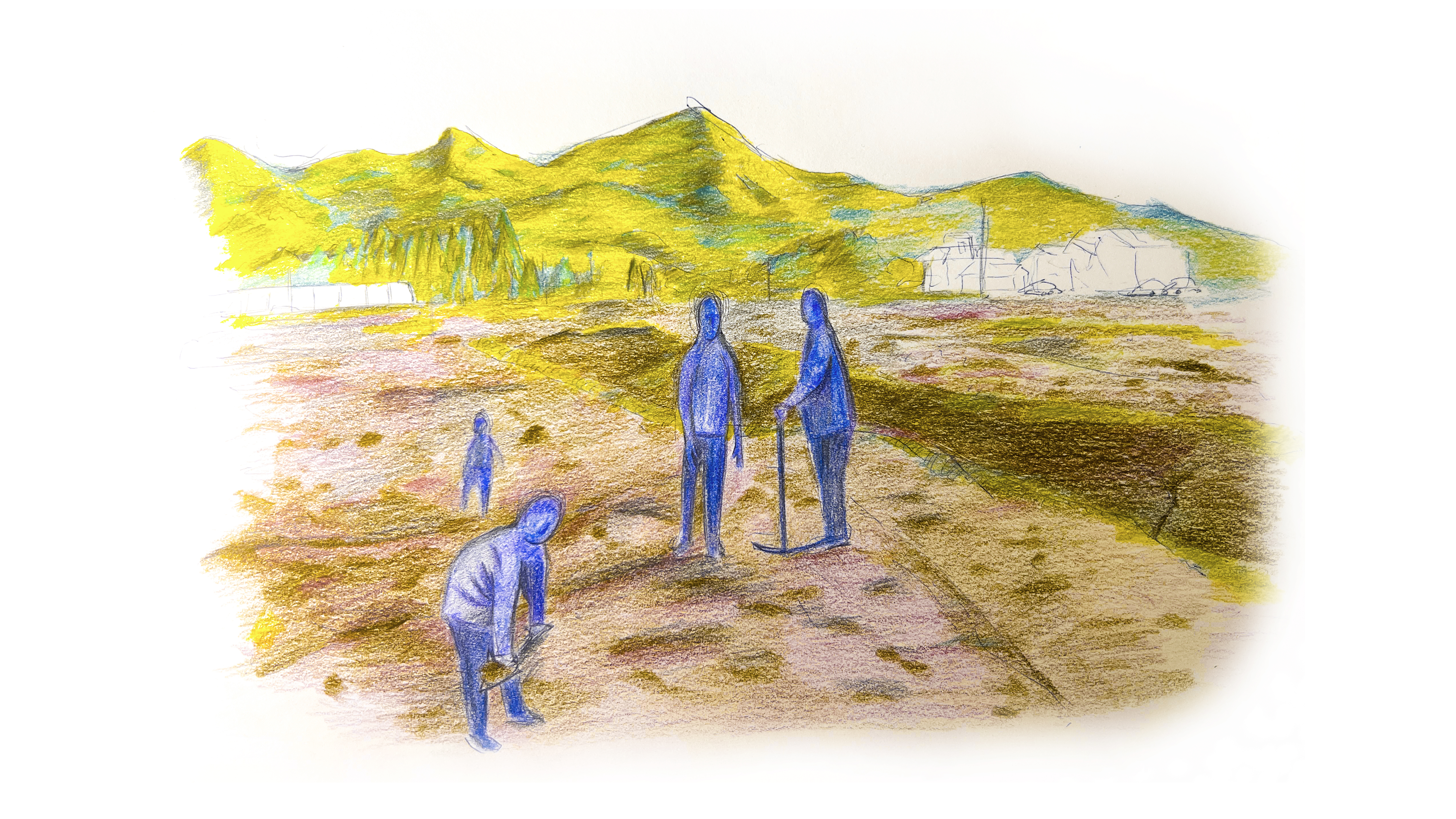

4. Learning Analog Forestry in Finland

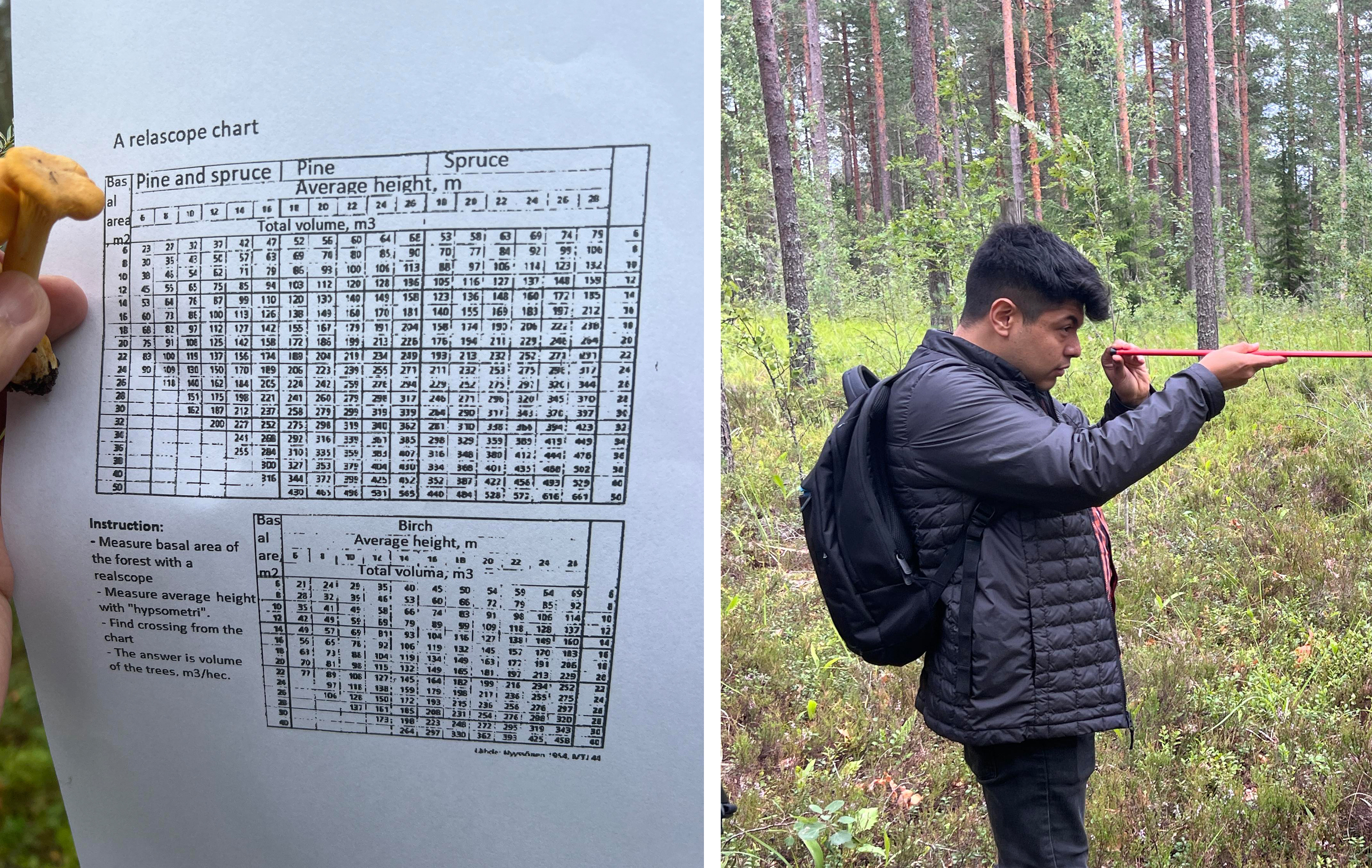

After completing a BAE degree in Design Engineering at Korea Polytechnic University, I entered the dual-degree MSc/MA Innovation Design Engineering programme, run jointly by Imperial College London and the Royal College of Art, to explore further the technical questions that had emerged from Digital Gleaning. During my first year, encouraged by the positive reception of Digital Gleaning, I applied to Sustainable Wood Futures, Aalto University’s summer school in Finland, and was humbled to be selected as one of four RCA students to receive support. The programme combined remote seminars with a two-week residential field course, pairing introductory lectures on silviculture with hands-on forest measurement in mixed conifer and birch stands.

Field exercises in Evo forest at Aalto University’s summer forestry school: learning traditional tools for height, diameter and basal-area sampling.

Aalto’s field instructors taught us with well-worn analog tools: a clinometer to gauge height, a Biltmore stick for diameter at breast height, and a Relascope for sampling basal area. Their deliberate routine—reading each measurement aloud, entering it in a waterproof notebook, then typing it later into a spreadsheet—carried the quiet authority of craft passed from one generation to the next.
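The arithmetic behind these analog tools is compact. The Python sketch below uses three standard relations. The first is the tangent method for clinometer heights on level ground. The second is the circle-area formula for basal area from diameter at breast height. The third is angle-count (relascope) sampling, in which each tree counted as "in" represents a fixed basal-area factor (BAF). The Biltmore stick reads DBH directly, so it needs no formula. The BAF of 2 m²/ha and all input values are assumptions for illustration.

```python
import math


def height_from_clinometer(distance_m, top_deg, base_deg):
    """Tangent method on level ground: angles measured from eye level,
    with the base angle negative when the stem base is below the eye."""
    return distance_m * (math.tan(math.radians(top_deg)) - math.tan(math.radians(base_deg)))


def tree_basal_area_m2(dbh_cm):
    """Cross-sectional stem area at breast height (DBH given in cm)."""
    return math.pi * (dbh_cm / 200.0) ** 2


def stand_basal_area_m2_per_ha(in_tree_count, baf=2.0):
    """Angle-count (relascope) sampling: each 'in' tree represents BAF m2/ha.
    The default BAF here is an illustrative assumption."""
    return in_tree_count * baf


h = height_from_clinometer(20.0, 45.0, -5.0)
print(f"height: {h:.1f} m")                                          # 21.7 m
print(f"basal area of a 30 cm stem: {tree_basal_area_m2(30):.4f} m2")  # 0.0707 m2
print(f"stand basal area: {stand_basal_area_m2_per_ha(11)} m2/ha")     # 22.0 m2/ha
```

The height formula also shows why careful reading matters. At 20 m from the stem, a one-degree slip in the 45-degree top angle shifts the estimate by roughly 0.7 m.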

Equally revealing were the conversations with local private forest owners who volunteered for several sessions. Like my own family’s bond to our Seon-san in Korea, they saw their parcels not only as sources of income but as cultural legacies inherited from their forebears. Most of them were older and cautious about high-tech solutions, favouring familiar instruments they trusted over unproven sensors. Their stance highlighted a vital design lesson: any new tool must honour existing practice while offering a clear reduction in labour.

I noted in my journal that the forest “already contains the numbers; the obstacle is the interface.” That entry became the starting point for a formal research direction aimed at moving from object‑centred experiments—Digital Gleaning’s branch connectors—to tree‑centred data acquisition.

5. Human–Computer–Tree Interaction

Back in London the idea matured into a framework I refer to as Human–Computer–Tree Interaction (HCTI). The premise is straightforward: treat every tree as a data node whose biological state can be queried, interpreted, and returned to stakeholders through an appropriate device layer. HCTI seeks to integrate sensing hardware, computer‑vision models, and decision interfaces while preserving field practicality.

Testing a Meta Quest headset prior to tree-measurement trials in a park, London, UK

An opportunity to test the concept arrived when Meta, PwC, and Innovate UK announced an XR hackathon. I assembled a small team of friends and software engineers (Zumeng Liu, Bilal Ahmad, Dzhamal Alanov, Congee), obtained a Meta Quest 3 headset, and built a weekend prototype called ForestFlux.

The workflow was as follows: the headset camera captured a full‑colour image of the target stem; an on‑device AI model identified the species; the user marked bole base and crown tip with the handheld controller to derive height; a circular sweep around the trunk gave DBH; the application then estimated above‑ground biomass and carbon storage using species‑specific allometric equations. All core code was written in C# within Unity, leveraging Meta’s “Capture Image with AI” kit for rapid inference.

Demonstrating species ID, height marking and DBH capture with the Quest prototype—data uploaded seconds after the scan.
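The measurement chain described above can be expressed in a few functions. The original prototype was written in C# in Unity, and the sketch below is Python rather than the ForestFlux code. It makes several assumptions. The controller returns 3-D points with y as the vertical axis, which is Unity's convention. DBH is approximated as twice the mean distance of the swept points from their centroid. Biomass uses a generic power-law allometry, AGB = a × DBH^b, with placeholder coefficients rather than a published species equation. The carbon fraction of 0.5 is a widely used simplification.

```python
import math


def height_m(base_xyz, tip_xyz):
    """Height as the vertical gap between the marked base and tip (y is up)."""
    return tip_xyz[1] - base_xyz[1]


def dbh_cm(sweep_xz):
    """Approximate DBH from horizontal points swept around the trunk at
    breast height: twice their mean distance from the centroid (metres in)."""
    n = len(sweep_xz)
    cx = sum(x for x, _ in sweep_xz) / n
    cz = sum(z for _, z in sweep_xz) / n
    mean_r = sum(math.hypot(x - cx, z - cz) for x, z in sweep_xz) / n
    return 2.0 * mean_r * 100.0


def biomass_and_carbon_kg(dbh, a, b, carbon_fraction=0.5):
    """Generic power-law allometry AGB = a * DBH**b (kg), then carbon mass."""
    agb = a * dbh ** b
    return agb, agb * carbon_fraction


# Hypothetical inputs: eight sweep points on a 0.15 m-radius trunk.
sweep = [(0.15 * math.cos(k * math.pi / 4), 0.15 * math.sin(k * math.pi / 4))
         for k in range(8)]
d = dbh_cm(sweep)
h = height_m((0.0, 0.0, 0.0), (0.2, 18.5, 0.1))
agb, carbon = biomass_and_carbon_kg(d, a=0.1, b=2.5)  # placeholder coefficients
co2e = carbon * 44.0 / 12.0  # convert carbon mass to CO2-equivalent mass
print(f"DBH {d:.1f} cm, height {h:.1f} m, AGB {agb:.0f} kg, CO2e {co2e:.0f} kg")
```

Real allometric equations often include height as well as DBH. The single-variable form is used here only to keep the chain readable.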

Measurement accuracy was moderate, with errors of 8 to 12 percent relative to tape-and-clinometer values, but the speed was compelling. A complete record could be generated in under ten seconds and uploaded to a cloud dashboard. Short demonstration clips shared on professional social networks drew unsolicited feedback from arborists, who described the method as “good enough for preliminary surveys” and valuable for public-engagement programmes where manual tools discourage volunteers.

ForestFlux addressed two limitations of Digital Gleaning. First, it focused on standing, living trees, not post‑harvest material, thereby aligning with contemporary forest‑management needs. Second, it moved beyond geometric surface matching to include biological and ecological parameters—species identity and carbon content—integrating them into a single measurement event.

6. Augmented Reality at Forest Scale

The Meta Quest experiment demonstrated that a single tree can deliver useful field data in seconds, yet forest-management decisions are rarely made one stem at a time. They concern watersheds, mixed-age stands, and economic or ecological outcomes measured in decades. While evaluating the limitations of my headset prototype, I was invited to join a Snap Inc.–Royal College of Art collaboration that originally targeted urban-transport emissions. Early visual mock-ups, however, felt disconnected from tangible land assets. The chance to redirect the brief toward forestry arrived naturally: if real-time overlays can teach a commuter about diesel exhaust, they can also teach a park visitor how stand composition influences carbon storage.

Exhibition stand for the Tiny Forest AR project, showing visitors how virtual seedlings grow on real ground.

Under the revised concept, Tiny Forest, I acted as lead immersive-experience designer, working with interaction researcher Linc Yin and developer Bilal Ahmad. We chose the smartphone as the primary device because it requires no specialised headset and therefore lowers the adoption barrier for schools and local councils. The application workflow is sequential. A user opens the camera view, selects a patch of ground, and assigns a soil profile from a predefined library calibrated to UK Forestry Standard data. The app then lets the user plant virtual seeds of selected species. As the phone tracks the physical ground plane, seedlings appear, grow to maturity in accelerated time, and update a panel that lists live estimates of above-ground biomass, annual carbon sequestration, potential timber yield, and indicative maintenance cost. All calculations run locally using simplified allometric models and soil-species suitability indices published by Forest Research.

App demo: users plant a custom stand, then watch live carbon-storage and cost metrics update on screen.
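A stand-level calculation of the kind Tiny Forest runs on the phone can be sketched as follows. Everything here is hypothetical: the two species, their growth rates and allometric coefficients, and the suitability scores standing in for a soil-species index. Growth is modelled as a linear DBH increase scaled by site suitability, which is far simpler than the Forest Research models the app draws on. Timber yield and maintenance cost are omitted.

```python
from dataclasses import dataclass

CO2_PER_C = 44.0 / 12.0  # molar-mass ratio converting carbon to CO2


@dataclass(frozen=True)  # frozen makes instances hashable, so usable as dict keys
class Species:
    name: str
    dbh_growth_cm_per_yr: float  # growth on an ideal site (hypothetical)
    a: float                     # allometric coefficient (hypothetical)
    b: float                     # allometric exponent (hypothetical)


def stand_agb_kg(plantings, suitability, years):
    """Total above-ground biomass of the virtual stand after `years`.

    plantings: {Species: number of trees}
    suitability: {species name: 0..1 index for the chosen soil profile}
    """
    total = 0.0
    for sp, count in plantings.items():
        dbh = sp.dbh_growth_cm_per_yr * suitability[sp.name] * years
        total += count * sp.a * dbh ** sp.b
    return total


def annual_co2_t(plantings, suitability, year, carbon_fraction=0.5):
    """Tonnes of CO2 taken up during one simulated year."""
    previous = max(year - 1, 0)  # avoid negative ages for year 0
    gain_kg = (stand_agb_kg(plantings, suitability, year)
               - stand_agb_kg(plantings, suitability, previous))
    return gain_kg * carbon_fraction * CO2_PER_C / 1000.0


oak = Species("oak", 0.8, 0.12, 2.4)
birch = Species("birch", 1.0, 0.10, 2.3)
plantings = {oak: 30, birch: 20}
soil_fit = {"oak": 0.9, "birch": 0.6}  # illustrative scores for one soil profile
for yr in (10, 20, 30):
    agb_t = stand_agb_kg(plantings, soil_fit, yr) / 1000.0
    print(f"year {yr}: {agb_t:.1f} t AGB, "
          f"{annual_co2_t(plantings, soil_fit, yr):.2f} t CO2 that year")
```

Because biomass grows as a power of diameter, annual uptake rises as the virtual stand matures. The live panel is meant to make exactly this kind of trade-off visible.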

Tiny Forest shifts the focus from stem-specific metrics to stand-level composition and makes explicit the trade-offs among species choice, site conditions, and long-term carbon yield. It complements the earlier headset prototype by addressing the educational layer between raw measurement and strategic management: once data exist, stakeholders still need a clear mental model of what the numbers imply at landscape scale.

7. Remote Sensing: Extended Coverage, Persistent Gaps

When Landsat 1 began circling Earth in 1972, forestry gained its first global vantage-point: blocky, false-colour images good enough to separate woodland from farmland. By the late 1980s aircraft were carrying laser scanners (LiDAR) that could measure canopy height in three dimensions, and pulse rates have since climbed high enough for today’s surveys to sample roughly ten points on every square metre of forest. National agencies now treat these tools as core infrastructure. England, for example, is compiling a continuous tree inventory from fixed-wing LiDAR and photogrammetry, aiming for full coverage by March 2025. At continental scale the European Space Agency’s Biomass satellite (launched in April 2025) sweeps 200-kilometre swaths with P-band radar and converts the echoes into above-ground-biomass maps.

The Guardian – 5 April 2025: “‘An exciting moment’: England’s urban and rural trees mapped for first time.”
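A canopy height model, the basic LiDAR product behind these surveys, is the highest return in each grid cell minus the ground elevation beneath it. At roughly ten points per square metre, a 1 m cell holds enough returns for a usable maximum. The Python sketch below assumes points arrive already classified as ground or non-ground. Real pipelines interpolate a terrain model for cells with no ground hits; this simplified version skips those cells.

```python
import math
from collections import defaultdict


def canopy_height_model(points, cell_m=1.0):
    """Per-cell canopy height = highest return minus lowest ground return.

    points: iterable of (x, y, z, is_ground). Cells lacking a ground return
    are skipped in this simplified sketch.
    """
    top = defaultdict(lambda: -math.inf)
    ground = defaultdict(lambda: math.inf)
    for x, y, z, is_ground in points:
        cell = (math.floor(x / cell_m), math.floor(y / cell_m))
        top[cell] = max(top[cell], z)
        if is_ground:
            ground[cell] = min(ground[cell], z)
    return {c: top[c] - ground[c] for c in top if c in ground}


# Hypothetical returns in two adjacent 1 m cells (elevations in metres).
pts = [
    (0.2, 0.3, 101.0, True), (0.7, 0.6, 118.4, False), (0.5, 0.1, 112.0, False),
    (1.4, 0.2, 100.8, True), (1.6, 0.8, 104.3, False),
]
print(canopy_height_model(pts))
```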

Coverage this broad is unprecedented, yet several practical gaps remain. In 2023 the UK National Forest Inventory compared LiDAR-derived maps with ground plots one year after the 2022 heatwave. In stands younger than ten years the airborne data missed roughly one in five sapling deaths because desiccated crowns blended into surrounding vegetation for months. A similar issue appeared in Central Finland in 2024, where Biomass radar estimates for Norway spruce deviated by about 27 tonnes per hectare on sites hit recently by bark-beetle infestation; the pixel size (≈4 ha) simply averaged away early-stage damage.
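The averaging effect is simple arithmetic. In the hypothetical case below, a 0.5 ha patch of beetle-killed spruce that has lost 160 t/ha sits inside a 4 ha pixel, and the pixel reports only the area-weighted mean change. The noise floor used to decide detectability is an assumed figure, not a published specification of the Biomass mission.

```python
def pixel_mean_change(pixel_ha, damaged_ha, loss_t_per_ha):
    """Area-weighted biomass loss seen by one pixel when only part is damaged."""
    return loss_t_per_ha * damaged_ha / pixel_ha


def detectable(change_t_per_ha, noise_t_per_ha):
    """A change is only flagged if it exceeds the sensor's noise floor."""
    return change_t_per_ha > noise_t_per_ha


change = pixel_mean_change(pixel_ha=4.0, damaged_ha=0.5, loss_t_per_ha=160.0)
print(change)                                    # 20.0
print(detectable(change, noise_t_per_ha=30.0))   # False (assumed noise floor)
```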

My family’s hillside illustrates the same limitation at local scale. A typhoon in 2019 pushed three Japanese cedars across an access path, but no one noticed until a liability complaint drew relatives to the site ten months later. The satellite image taken that season showed no clear change at 10-metre resolution, and no aerial survey was due for another year. What we needed was a low-cost sensor on the ground to flag the fallen stems within days, not seasons.
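A ground sensor of this kind could be as simple as a stem-mounted accelerometer that reports tilt. The Python sketch below is a hypothetical design, not a documented EarthCode component. It converts a static accelerometer reading into the angle between the sensor axis and gravity. It then raises an alert only when the stem stays far from its installed angle for several consecutive readings, so that wind sway does not trigger it. The 30-degree threshold and three-reading rule are assumptions.

```python
import math


def tilt_deg(ax, ay, az):
    """Angle (degrees) between the sensor's z axis and gravity, from a static
    accelerometer reading in any consistent unit. Input must be non-zero."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    cos_angle = max(-1.0, min(1.0, az / norm))  # clamp floating-point overshoot
    return math.degrees(math.acos(cos_angle))


def fallen_alert(readings, baseline_deg, threshold_deg=30.0, min_consecutive=3):
    """True once tilt stays more than threshold_deg away from the installed
    baseline for min_consecutive readings in a row (ignores brief sway)."""
    run = 0
    for ax, ay, az in readings:
        if abs(tilt_deg(ax, ay, az) - baseline_deg) > threshold_deg:
            run += 1
            if run >= min_consecutive:
                return True
        else:
            run = 0
    return False


upright = [(0.02, 0.01, 0.99)] * 5
toppled = upright + [(0.98, 0.05, 0.10)] * 3
baseline = tilt_deg(*upright[0])
print(fallen_alert(upright, baseline))  # False
print(fallen_alert(toppled, baseline))  # True
```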

In practice, then, remote platforms outline the forest while close-range instruments fill in the details. National LiDAR grids give reliable canopy models; smartphones, stem-mounted scanners and micro-climate probes supply the day-to-day status that determines whether interventions succeed. Blending those two layers, broad coverage from above and high-resolution data from within, is the operational definition of Human–Computer–Tree Interaction. Satellites are not replaced; they are complemented, ensuring that management actions arrive in time and at the right place.

8. EarthCode 1.0: Assigning a Verifiable ID to Every Tree

Effective in-situ monitoring begins with reliable verification. Just as people must present an official document before opening a bank account, a tree must present some stable marker before its growth data can be logged with confidence. Diameter tapes and painted plot numbers work for small research sites, but they are labour-intensive and prone to error at landscape scale. The question I set for my master’s graduation project was therefore precise: Can a standing tree provide its own biometric signature, readable by a field device in minutes and traceable across years?

Illustration—bark ridges form a fingerprint

My master’s capstone, EarthCode, set out to resolve this verification step by treating bark morphology as a biometric signature. Lenticel arrangements, ridge spacing and local swellings generate a texture field that—after appropriate filtering—remains stable for years while differing measurably from one stem to the next.

Prototype scanner mapping bark texture while on-device software converts point clouds into biometric descriptors.

A single scan of a tree’s bark section generates the entire ‘Bark-Code’

The outer bark proved to be an ideal substrate for this signature. The design challenge was to capture its texture quickly and at minimal cost so that family forest owners, who collectively manage almost one-third of the world’s forest area, could use the system without specialised training or expensive consumables.

Machine-learning workflow: individual lenticels segmented for training the bark-pattern classifier.

Early prototypes paired a smartphone’s LiDAR sensor with a short photogrammetry sequence. The user circled the trunk once, holding the phone at arm’s length, and the application fused the point cloud with high-resolution imagery. A lightweight convolutional model then extracted a “bark fingerprint,” a numerical vector short enough for offline storage yet distinctive enough to re-identify the same trunk at subsequent visits. In benchmark trials on 450 birch trees the method achieved just over 91 percent top-one accuracy; misclassifications mostly involved very young stems whose bark had not yet developed ridges.

Concept image: Mobile application in use—scanning a street tree to create or verify its digital profile.
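Re-identification at a revisit reduces to nearest-neighbour search over stored fingerprints. The Python sketch below assumes the convolutional model has already produced fixed-length vectors; the model itself is out of scope. It matches vectors by cosine similarity, one common choice that may differ from EarthCode's. Top-one accuracy is then the share of revisit scans whose best match is the correct tree. The three-element vectors and tree IDs are toy values.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def identify(query, gallery):
    """Top-1 match: the stored tree ID whose fingerprint is most similar."""
    return max(gallery, key=lambda tree_id: cosine(query, gallery[tree_id]))


def top1_accuracy(revisits, gallery):
    """Share of revisit scans (true_id, vector) matched to the correct tree."""
    hits = sum(identify(vec, gallery) == true_id for true_id, vec in revisits)
    return hits / len(revisits)


# Toy three-element fingerprints; real descriptors would be much longer.
gallery = {
    "birch-001": [0.9, 0.1, 0.3],
    "birch-002": [0.2, 0.8, 0.5],
    "birch-003": [0.4, 0.4, 0.9],
}
revisits = [
    ("birch-001", [0.85, 0.15, 0.35]),
    ("birch-002", [0.25, 0.75, 0.55]),
    ("birch-003", [0.5, 0.45, 0.6]),
]
print(identify([0.85, 0.15, 0.35], gallery))  # birch-001
print(top1_accuracy(revisits, gallery))       # 1.0
```

A gallery of hundreds of trees with long descriptors would call for an indexed similarity search rather than the linear scan shown here.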

Technical progress alone was not sufficient. Interviews with more than forty forest owners in Finland, the United Kingdom, the United States, Kenya and South Korea confirmed recurring constraints: sensor cost, maintenance effort and unfamiliar verification rules required by carbon-credit auditors. Owners repeatedly stated that any solution encouraging conservation had to “cost less than a logging truck and work with the phone already in my pocket.” Those conversations drove continuous simplification of the capture workflow.

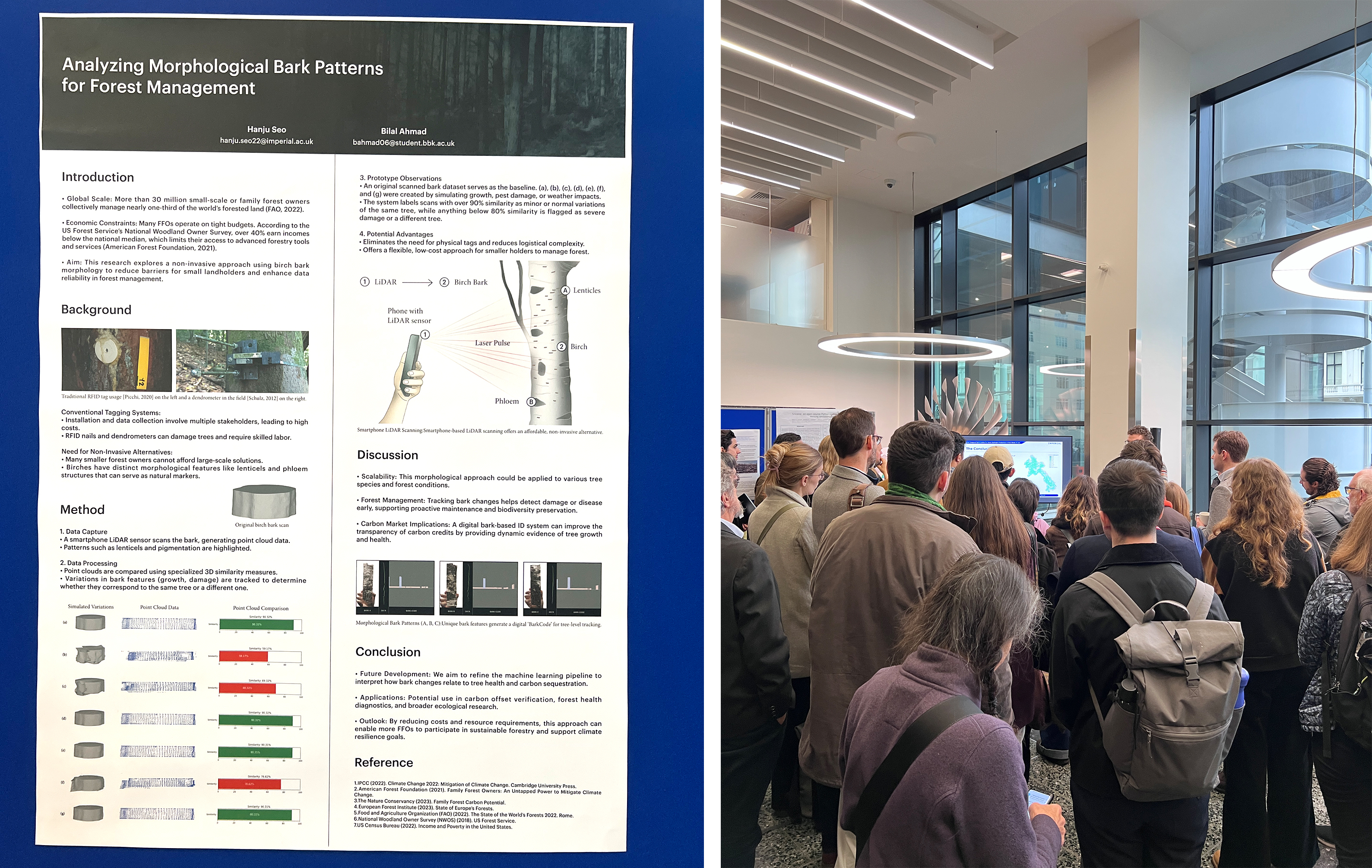

Poster “Analyzing Morphological Bark Patterns for Forest Management” on display at the Grantham Institute Climate Research Showcase (2025).

Preliminary results were presented as “Analyzing Morphological Bark Patterns for Forest Management” at the 2025 Grantham Institute Climate Research Showcase. The poster outlined three findings: first, bark morphology is sufficiently distinctive to serve as a biometric across multiple age classes of birch; second, smartphone-based scanning can deliver point clouds dense enough for reliable identification; and third, longitudinal comparison of successive scans can quantify radial growth within a millimetre, allowing non-invasive health checks.
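The third finding, radial growth from successive scans, can be illustrated by fitting a circle to the same trunk section in two scans and subtracting the radii. The sketch below uses the algebraic (Kasa) least-squares circle fit, solved with Cramer's rule so that it needs only the standard library. It assumes a horizontal slice at a fixed height has already been extracted from each point cloud. Real bark is not circular, so the fit residuals would need checking. The synthetic rings are illustrative.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points):
    """Kasa fit: least squares for x^2 + y^2 + D*x + E*y + F = 0.

    Builds the 3x3 normal equations and solves them with Cramer's rule.
    Returns (cx, cy, r) in the input units.
    """
    rows = [(x, y, 1.0) for x, y in points]
    rhs = [-(x * x + y * y) for x, y in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    det = _det3(ata)
    solution = []
    for k in range(3):
        mk = [row[:] for row in ata]
        for i in range(3):
            mk[i][k] = atb[i]  # replace column k with the right-hand side
        solution.append(_det3(mk) / det)
    d, e, f = solution
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)


def radial_increment_mm(section_before_m, section_after_m):
    """Radial growth between two scans of the same trunk slice (m in, mm out)."""
    return (fit_circle(section_after_m)[2] - fit_circle(section_before_m)[2]) * 1000.0


def ring(r, n=24, cx=0.3, cy=-0.1):
    """Synthetic, perfectly circular slice used only for this demonstration."""
    return [(cx + r * math.cos(2 * math.pi * k / n), cy + r * math.sin(2 * math.pi * k / n))
            for k in range(n)]


print(round(radial_increment_mm(ring(0.1500), ring(0.1532)), 2))  # 3.2
```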

EarthCode 1.0’s contribution is narrowly defined yet foundational: it supplies a persistent, machine-readable identity for every living stem, closing a verification gap that has long slowed the adoption of data-driven forestry by smallholders. By eliminating metal tags, reducing per-tree costs and working on hardware many foresters already own, the system lowers the barrier to systematic monitoring and prepares ground-based data to integrate seamlessly with remote-sensing layers discussed earlier in this essay.

9. Trees as Assets That Stay Standing

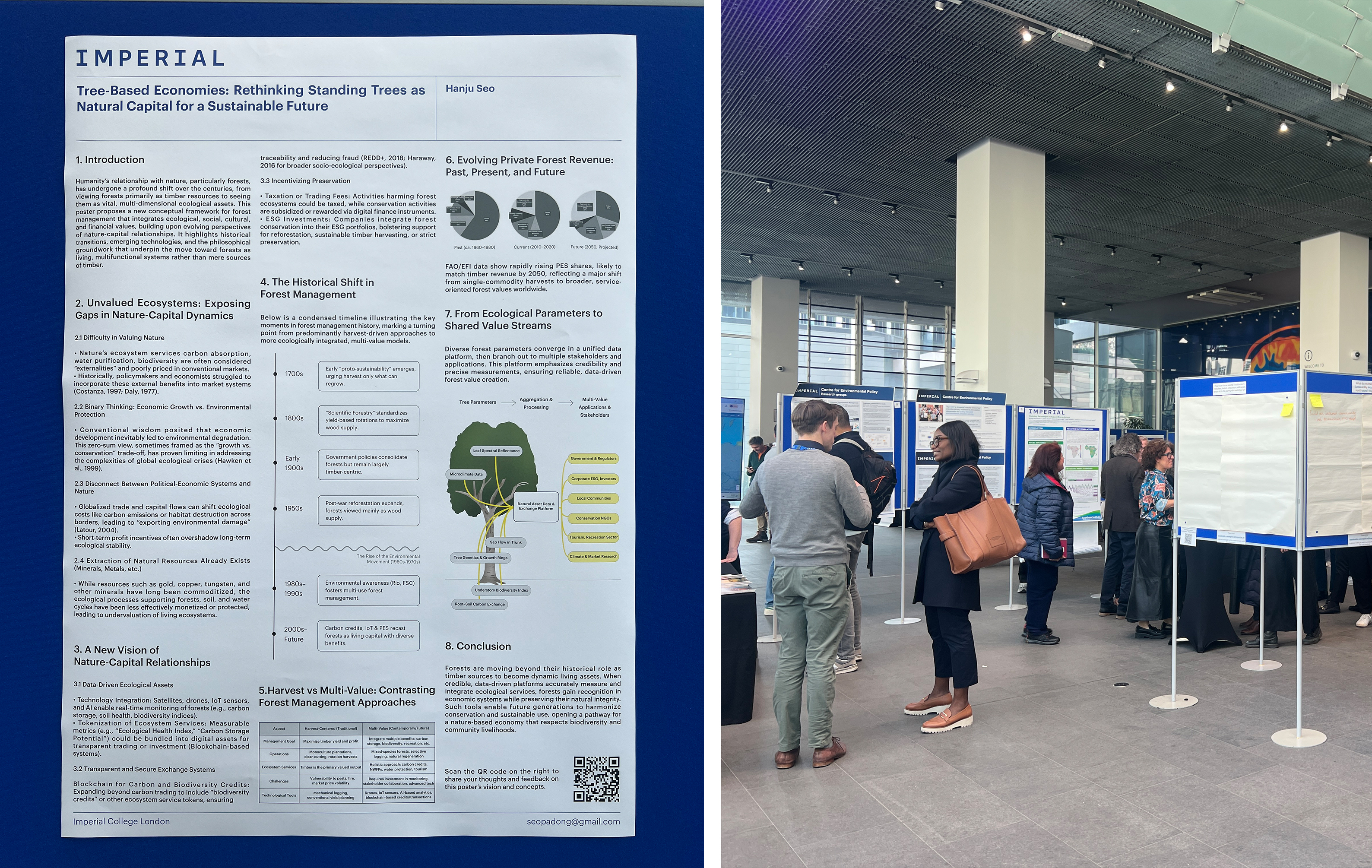

If a living tree can verify its own identity and document its own change, it becomes possible to treat that tree as an asset without harvesting it. This premise guided my poster “Tree-Based Economies: Rethinking Standing Trees as Natural Capital” for the Imperial Futures Exhibition. The work traces a historical arc: forests were once valued almost exclusively for timber; they are now recognised for climate regulation, watershed stability, biodiversity and cultural heritage. Yet these non-timber services rarely appear on conventional balance sheets because verification remains costly.

“Tree-Based Economies” poster presented at the Imperial Futures Exhibition (2025), arguing for revenue models that keep forests standing.

The poster outlines three gaps. First, ecosystem benefits—carbon storage, habitat quality, water purification—are externalities in most market models, so ownership confers little direct financial reward for preserving them. Second, policy frameworks still frame growth and conservation as mutually exclusive, encouraging short-term extraction over long-term stewardship. Third, global capital flows shift ecological costs across borders, obscuring accountability.

Against that background the poster proposes a data-driven pathway in which each tree contributes verifiable metrics to a shared platform. Advances in satellite imagery, low-cost IoT sensors and EarthCode-style bark identification enable continuous measurement of biomass, growth rate and health status. These data streams can be tokenised—whether as carbon credits, biodiversity credits or multi-parameter ecosystem-service units—and exchanged on transparent ledgers. The mechanism resembles commodity markets for metals, but the underlying asset remains alive and productive.

A condensed timeline on the poster shows the transition from harvest-centred forestry in the nineteenth and twentieth centuries to payment-for-ecosystem-services schemes that began in the late 1990s. Current FAO and EFI figures indicate that payments for ecosystem services already account for nearly one fifth of private-forest revenue worldwide and could reach parity with timber by 2050. The poster concludes that reliable, low-cost field verification is the missing infrastructure; once in place, it aligns economic incentives with conservation outcomes and offers smallholders a realistic alternative to logging.
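The poster leaves the ledger technology open. The minimal property a "transparent ledger" needs is tamper evidence, which a hash chain provides. Each record stores the SHA-256 hash of its predecessor, so a past measurement cannot be edited without breaking the chain. The Python sketch below shows only that property. It is not a blockchain, has no consensus or access control, and does not implement tokenisation. Tree IDs and metric names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_record(chain, tree_id, metrics):
    """Append a measurement whose hash covers the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"tree_id": tree_id, "metrics": metrics, "prev": prev_hash}
    chain.append({**body, "hash": _digest(body)})


def verify(chain):
    """Recompute every link; any edited or reordered record fails."""
    prev_hash = GENESIS
    for entry in chain:
        body = {k: entry[k] for k in ("tree_id", "metrics", "prev")}
        if entry["prev"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
add_record(ledger, "birch-001", {"dbh_cm": 30.2, "carbon_kg": 246})
add_record(ledger, "birch-001", {"dbh_cm": 30.5, "carbon_kg": 253})
print(verify(ledger))                      # True
ledger[0]["metrics"]["carbon_kg"] = 400    # tamper with an old record
print(verify(ledger))                      # False
```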

10. EarthCode Ltd. and the Road Ahead

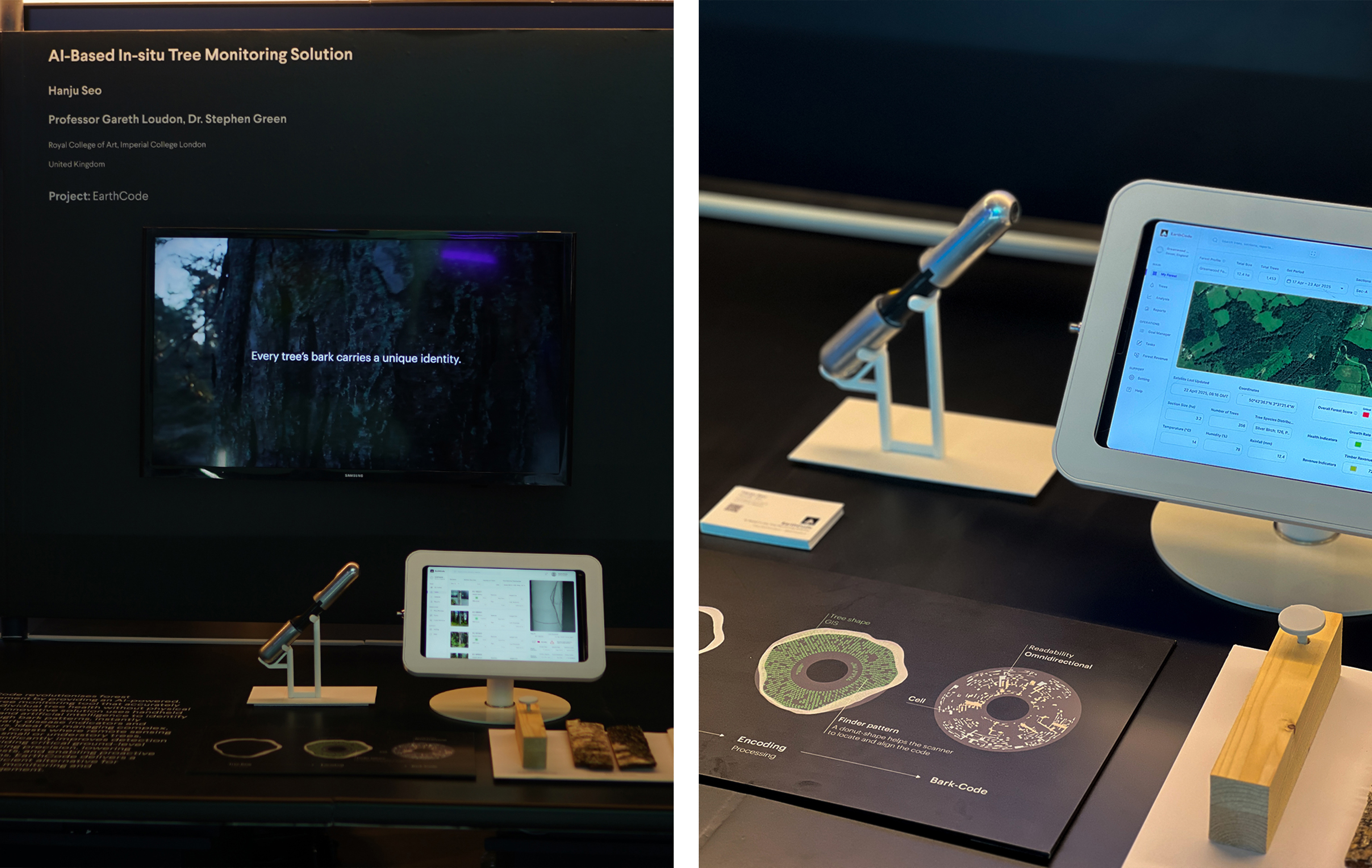

Academic prototypes demonstrate feasibility, but impact depends on deployment. In 2024 I incorporated EarthCode Ltd. to convert the verification research into practical tools for forest owners, insurers and regulatory bodies. The company’s first product line couples stem-mounted environmental probes with a mobile application that merges three data layers: satellite canopy models, EarthCode biometric IDs and real-time micro-climate readings. The system presents landowners with plain-language alerts—drought stress, pest risk, wind-throw probability—and generates audit-ready files compatible with emerging carbon-credit standards.

Tablet interface prototype: per-tree profiles with biometric ID, growth trend and health alerts.
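Drought-stress alerts need a measurable proxy. The Python sketch below assumes the probe logs hourly air temperature and relative humidity. It computes vapour pressure deficit (VPD) with the saturation vapour pressure equation given in FAO-56, then turns a sustained high-VPD run into a plain-language message. The 2.0 kPa threshold and six-hour duration are illustrative assumptions, not validated values.

```python
import math


def saturation_vapour_pressure_kpa(temp_c):
    """FAO-56 equation for saturation vapour pressure over water (kPa)."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def vpd_kpa(temp_c, rh_percent):
    """Vapour pressure deficit: how far the air is from saturation (kPa)."""
    return saturation_vapour_pressure_kpa(temp_c) * (1.0 - rh_percent / 100.0)


def drought_alert(hourly, threshold_kpa=2.0, min_hours=6):
    """Return a plain-language message if VPD exceeds threshold_kpa for at
    least min_hours consecutive readings, else None. Thresholds are
    illustrative assumptions, not validated values."""
    run = longest = 0
    for temp_c, rh in hourly:
        run = run + 1 if vpd_kpa(temp_c, rh) > threshold_kpa else 0
        longest = max(longest, run)
    if longest >= min_hours:
        return f"Drought stress likely: dry air for {longest} consecutive hours."
    return None


day = [(22, 60)] * 4 + [(32, 30)] * 7 + [(24, 55)] * 3  # (deg C, % RH) per hour
print(drought_alert(day))  # Drought stress likely: dry air for 7 consecutive hours.
```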

We introduced the integrated platform at the Dubai AI Festival in April 2025 under the title “EarthCode: AI-Based In-Situ Tree-Monitoring Solution.” Discussions ranged from mitigating heat stress in Emirati date palms to monitoring pine wilt outbreaks in Korean mountains. The breadth of interest reinforced the need for ground-truth tools that complement, rather than replace, remote sensing.

EarthCode booth at the Dubai AI Festival (April 2025) showcasing EarthCode 1.0 and analytics dashboards.

The company’s long-term objective is broader than forestry. Under the banner “Smarter Earth Monitoring” we aim to extend the verification-and-alert model to other living infrastructure: mangrove belts that shield coastlines, urban trees that moderate heat islands, and agro-forestry systems that integrate food and fibre. The near-term roadmap includes species-agnostic descriptor models, an open API for third-party analytics and pilot projects with NGOs to deploy low-cost kits in community forests.

My personal aim remains constant: to embed every tree that people rely on into a living information network so that care becomes routine rather than reactive. Achieving that goal will require iterating hardware in the field, refining algorithms with diverse datasets and aligning with financial frameworks that reward preservation. EarthCode Ltd. exists to carry out that work in real time, translating research insight into operational change.

11. Epilogue – Toward a Planet-Scale Internet of Living Things

Colleagues sometimes suggest that my attention is too narrow. Climate technology, they argue, spans satellites, power grids and urban analytics—why concentrate on trees? My reply is consistent: innovation is constrained less by breadth than by depth. A single mature tree operates across chemical, mechanical and social timescales; wiring such complexity into human infrastructure demands sustained focus.

That focus is strategic, not compulsive. Each stem that can confirm its identity, report its moisture loss or flag an early pest attack shifts resource management from periodic inspection to continuous dialogue. When neighbourhood families can review the daily growth curve of a municipal oak as easily as they check a weather forecast, the interface between biological systems and computation will have matured.

Reaching that point requires incremental engineering. Progress will come from repeated field trials, data reconciliation and revised protocols, not from a single breakthrough. I will therefore keep refining enclosures, rewriting algorithms and walking uneven forest ground with batteries that drain faster than planned. The objective remains unchanged: integrate every tree humans depend on into a reliable information network so that stewardship becomes routine rather than reactive.

Ongoing field work—measuring live stems as the research and product loop continues.

Credits & Acknowledgements

Author & Principal Investigator

Hanju Seo — Research, writing, photography, design prototypes, and overall curation of this journal.

Academic Advisors

Prof. Gareth Loudon — Royal College of Art, MSc/MA Innovation Design Engineering

Dr. Stephen Green — Imperial College London, MSc/MA Innovation Design Engineering

Key Collaborators

Bilal Ahmad — Machine-learning models, point-cloud processing, Tiny Forest development

Zumeng Liu, Dzhamal Alanov, Congee — Unity coding and UX testing for ForestFlux prototype

Linc Yin — Interaction research for Tiny Forest

Institutional Support

Royal College of Art — Field-study grant (Aalto University Summer School)

Imperial College London, Grantham Institute — Poster showcase and research feedback

Snap Inc. × RCA Project — AR concept incubation

Meta, PwC, Innovate UK — XR Hackathon resources

Supporting Organisations

Bentley Motors, Dezeen, IKEA Korea, Embassy of Sweden in Seoul, Korea Institute of Design Promotion (KIDP), Prototypes for Humanity, Dubai International Financial Centre (DIFC), WIRED Japan